VIRTUAL AS WELL AS REAL-LIFE AGENTS RECEIVING AND EXECUTING TASKS AND MISSIONS IN THE CORE SYSTEM

Agents in the Core System

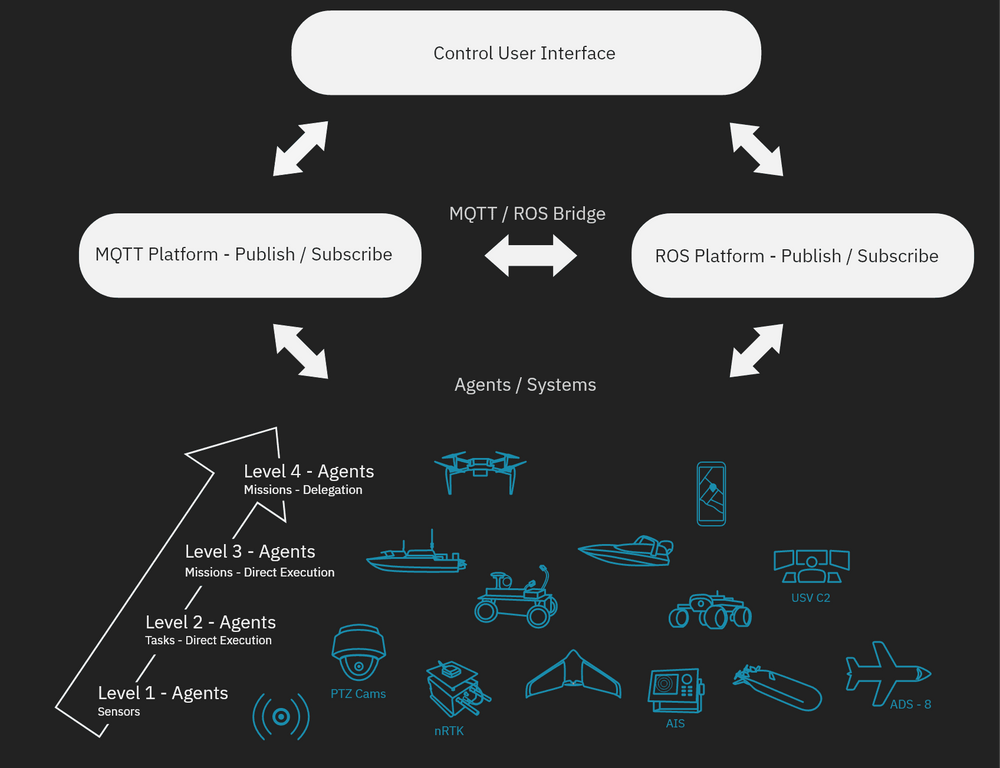

An agent is a unit that can receive tasks or missions from other agents and services in the Core System and carry them out. Agents can also provide the Core System with information to be shared with other agents and services. Agents are categorized into four levels based on their capacity to act autonomously. High-level descriptions of each level are given below.

Level 1 - Sensors

Sensors that provide the system with data without needing to be controlled, e.g. for inspecting or guarding buildings or accident sites.

Level 2 - Task - Manually

A single task performed by a specific agent, where the operator selects both the task and the appropriate agent.

Level 3 - Mission - Manually

When several agents need to cooperate, a task description is used. It can contain several different tasks and agents working toward a common goal, e.g. investigating a suspicious object. Both the tasks and the agents are chosen by the operator.

Level 4 - Mission - Autonomous delegation

As in Level 3, the operator knows the objective of the mission and, based on it, provides the system with tasks. However, the agents that carry out the mission are chosen by the system.
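The difference between the levels can be illustrated with a small data model. The sketch below is hypothetical: the names `AgentLevel`, `Agent`, `Task` and `delegate` are not part of the Core System, and the greedy first-fit capability matching used for Level 4 is a simple stand-in chosen for illustration, not the delegation mechanism the Core System actually uses. It only shows the idea that at Level 4 the operator supplies tasks and the system picks the agents.

```python
from dataclasses import dataclass
from enum import IntEnum


class AgentLevel(IntEnum):
    """The four autonomy levels described above (member names are illustrative)."""
    SENSOR = 1              # Level 1: provides data, not controlled
    TASK_MANUAL = 2         # Level 2: operator picks one task and one agent
    MISSION_MANUAL = 3      # Level 3: operator picks several tasks and agents
    MISSION_AUTONOMOUS = 4  # Level 4: operator gives tasks, system picks agents


@dataclass
class Agent:
    name: str
    capabilities: set[str]  # hypothetical: task types this agent can perform
    busy: bool = False


@dataclass
class Task:
    name: str
    required_capability: str


def delegate(tasks: list[Task], agents: list[Agent]) -> dict[str, str]:
    """Hypothetical Level 4 delegation: assign each task, in order, to the
    first idle agent that has the required capability.

    Raises ValueError if a task cannot be assigned to any idle agent."""
    assignment: dict[str, str] = {}
    for task in tasks:
        candidate = next(
            (a for a in agents
             if not a.busy and task.required_capability in a.capabilities),
            None,
        )
        if candidate is None:
            raise ValueError(f"no available agent for task {task.name!r}")
        candidate.busy = True  # in this sketch an agent takes at most one task
        assignment[task.name] = candidate.name
    return assignment


if __name__ == "__main__":
    agents = [Agent("usv1", {"survey"}), Agent("uav1", {"survey", "photo"})]
    mission = [Task("map_area", "survey"), Task("inspect_object", "photo")]
    print(delegate(mission, agents))
```

Running the example prints `{'map_area': 'usv1', 'inspect_object': 'uav1'}`. Note that greedy first-fit can fail even when a valid assignment exists (for instance if the only photo-capable agent is taken by a survey task first); a real delegation scheme would need to search or negotiate rather than assign in a single pass.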

Both simulated and real agents are integrated into the Core System. To support users, there are code examples demonstrating how simple agents can be integrated into Atlas and the integration map. These agents are classified as simple since they can perform simple tasks but have no logic beyond that. The code examples are available in the GitHub repo that is linked below.
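To make "simple" concrete, the following is a minimal, hypothetical sketch of such an agent: it executes only the task types it has a handler for, rejects everything else, and reports status changes. It is not taken from the linked GitHub examples and does not use the Atlas or integration-map APIs; the class `SimpleAgent`, its method names and the status strings are assumptions, and the transport to the Core System is replaced by a local log.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SimpleAgent:
    """Hypothetical simple agent: executes a fixed set of task types and
    has no planning or decision logic of its own."""
    name: str
    # Maps a task type to the function that performs it (hypothetical handlers).
    handlers: dict[str, Callable[[dict], None]]
    # Status messages that would be reported back to the Core System.
    log: list[tuple[str, str]] = field(default_factory=list)

    def report(self, task_id: str, status: str) -> None:
        # A real integration would publish this to the Core System;
        # this sketch only records it locally.
        self.log.append((task_id, status))

    def receive(self, task_id: str, task_type: str, params: dict) -> bool:
        """Execute a received task; return True if it finished successfully."""
        handler = self.handlers.get(task_type)
        if handler is None:
            # Unsupported task: reject it rather than improvise.
            self.report(task_id, "rejected")
            return False
        self.report(task_id, "running")
        try:
            handler(params)
        except Exception:
            self.report(task_id, "failed")
            return False
        self.report(task_id, "finished")
        return True


if __name__ == "__main__":
    def move_to(params: dict) -> None:
        print(f"moving to {params['lat']}, {params['lon']}")

    agent = SimpleAgent("rover1", {"move_to": move_to})
    agent.receive("t1", "move_to", {"lat": 58.39, "lon": 15.57})
    agent.receive("t2", "take_photo", {})
    print(agent.log)
```

Keeping all decision-making outside the agent, as here, matches the description above: choosing which tasks to send and to whom is left to the operator or to the Core System.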

INTEGRATION TO THE CORE SYSTEM

ROS

ROS1 and ROS2 are used to integrate robots into the Core System. Development environments have been set up using ROS1, while ROS2 has been used for the Linköping Robotic System (LRS), which handles Task Specification Trees (TSTs) and delegation, as well as for WARA-PS-specific information. Accessing the WARA-PS-specific information requires an account on the LiU GitLab. If you want access to the LiU GitLab or have questions about ROS2, please contact LiU AIICS; their contact information is available on the LiU AIICS website. In your request, state your name and the email address that should be used. Further information can be found below.

SIMULATOR

ArduPilot SITL

The WARA-PS Software-In-The-Loop (SITL) Simulator is built on ArduPilot and can act as a UxV, i.e. as a multicopter, helicopter, plane, rover, boat or submarine, including derivatives of these. ArduPilot normally runs on physical Flight Control Systems (FCSs). It is COTS, open-source software with a large community and supports many FCSs and related electronics such as lidar sensors, GPS receivers and altitude sensors.

Currently, the simulator is used as a development tool for boats and rovers, where validation tests are performed before deploying to real hardware and running field tests. It is built on the same software that runs on WARA-PS Pixhawk-controlled vehicles such as the Mini-USVs and Mini-UGVs.